building a drone for autonomous exploration in 3 months

Thanks to the University of Toronto's Engineering Science Robotics Capstone and to team members Misha Bagrianski, Peishuo Cai, and Yiqun Ma.

objective

We were interested in creating an exploration drone for Search and Rescue (SAR) in instances of infrastructure failure, such as post-earthquake zones.

The objective of the drone is to search for openings in infrastructure rubble, explore the interior of otherwise inaccessible or unstable areas, and be the first to identify the presence of stranded people.

Once victims are found, the drone drops packages near them, such as first-aid supplies, a radio for communication, or a beacon to alert rescuers.

We set out to prototype the following key features:

- Autonomous exploration in GPS-denied environments using only onboard sensors

- Multi-package delivery mechanism for dropping supplies

- Visualization of the explored environment for debriefing with rescuers

timeline

| Weeks | Milestone |
| --- | --- |
| 00-02 | Hardware Assembly, Manual Takeoff |
| 02-05 | Autonomous Takeoff and Landing |
| 05-06 | Autonomous Waypoint Following |
| 06-10 | Vision and Planning Algorithms |
| 10-13 | Testing, Debugging |

1. autonomous exploration

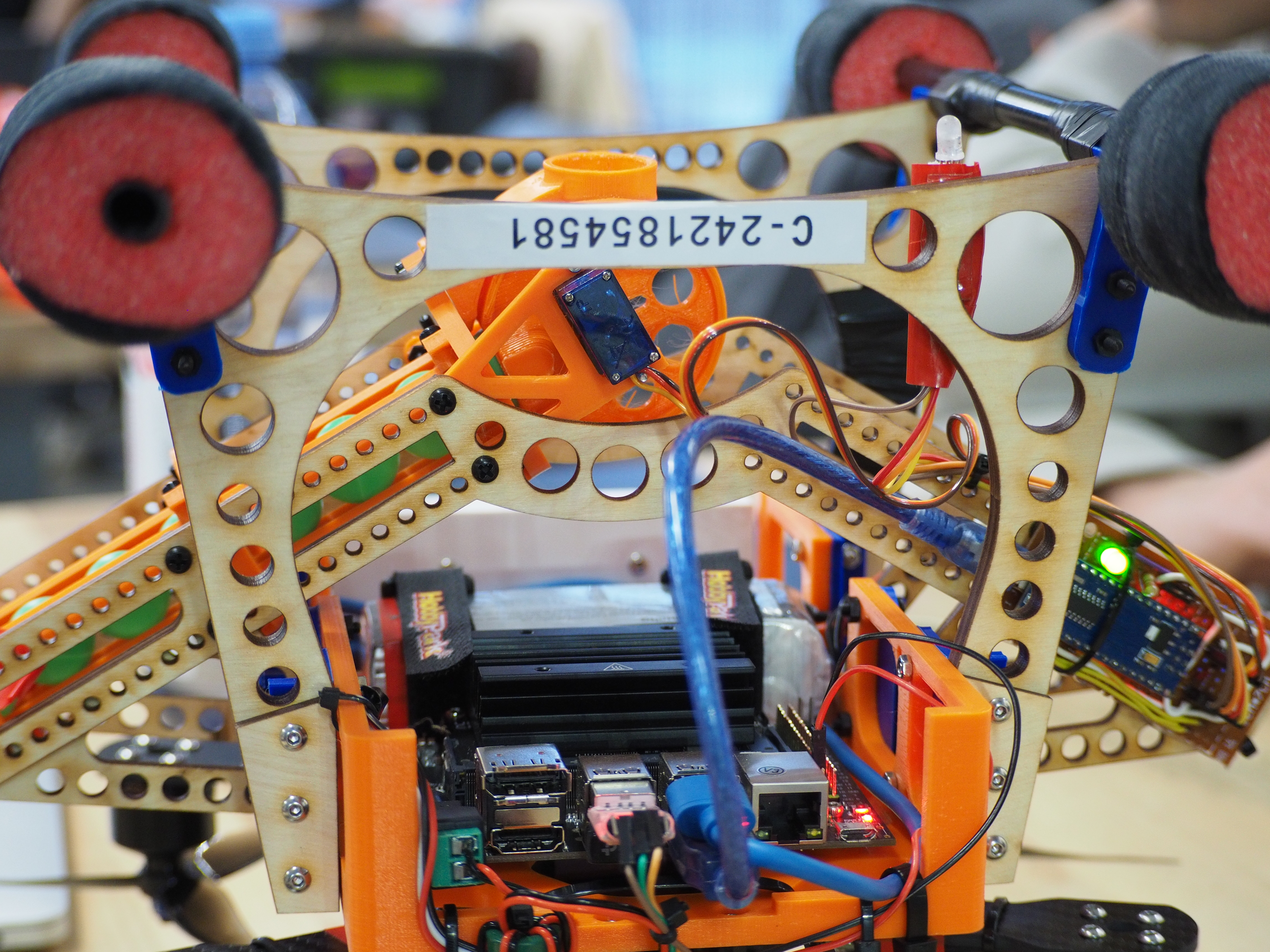

Our autonomy software stack is built on ROS 2 and the PX4 autopilot, with MAVROS bridging the two over MAVLink.

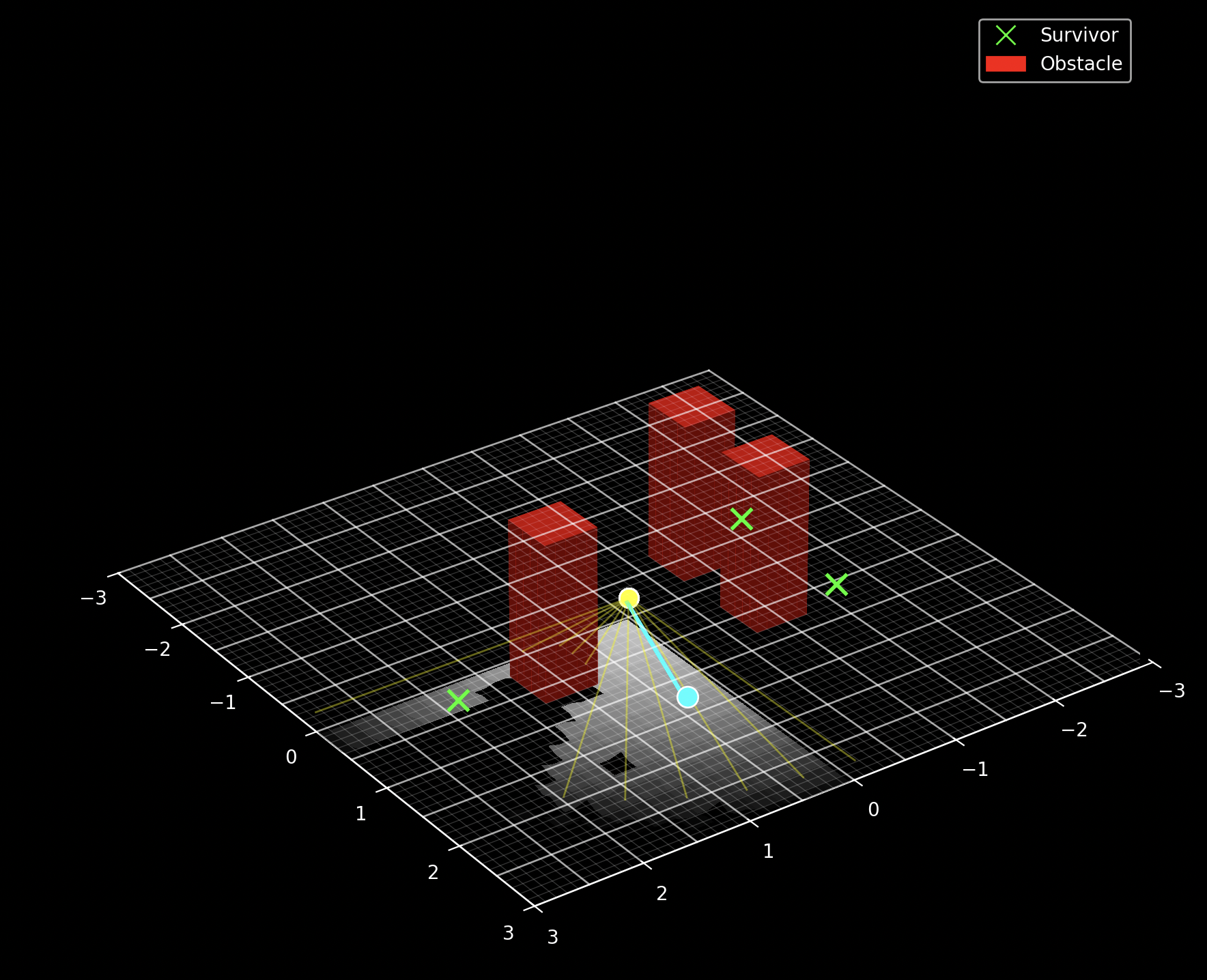

We initialize an occupancy grid representation of the environment in which each cell is stored as an 8-bit signed integer. The maximum value, 127, denotes a cell that is occupied with 100% certainty; the minimum value, -128, denotes a cell that is free with 100% certainty; and 0 denotes complete uncertainty. We then populate the grid by raycasting from the drone's camera, using AprilTags to mark targets and obstacles. A full active SLAM algorithm would have been preferable, but time and hardware limitations ruled it out for this prototype.
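The int8 grid and its raycast update can be sketched with the standard-library `array` type code `'b'` (a signed 8-bit integer). This is a minimal sketch under stated assumptions, not the project's code: the update step sizes `HIT` and `MISS`, the Bresenham line traversal used for raycasting, the helper `mark_occupied`, and the piecewise-linear mapping from cell value to occupancy probability are all hypothetical choices.

```python
from array import array
from typing import List, Tuple

INT8_MIN, INT8_MAX = -128, 127  # certainly free / certainly occupied; 0 means unknown
HIT, MISS = 40, -20             # hypothetical per-observation update steps


def line_cells(x0: int, y0: int, x1: int, y1: int) -> List[Tuple[int, int]]:
    """Bresenham traversal: grid cells on the segment (x0, y0) -> (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


class OccupancyGrid:
    """width x height grid whose cells are stored as 8-bit signed integers."""

    def __init__(self, width: int, height: int) -> None:
        self.width, self.height = width, height
        # Type code 'b' is a signed char, so every cell really is an int8 (starts unknown).
        self.cells = array("b", [0] * (width * height))

    def in_bounds(self, x: int, y: int) -> bool:
        return 0 <= x < self.width and 0 <= y < self.height

    def get(self, x: int, y: int) -> int:
        return self.cells[y * self.width + x]

    def _add(self, x: int, y: int, delta: int) -> None:
        if self.in_bounds(x, y):
            i = y * self.width + x
            # Saturate at the int8 limits; array('b') would raise OverflowError otherwise.
            self.cells[i] = max(INT8_MIN, min(INT8_MAX, self.cells[i] + delta))

    def cast_ray(self, x0: int, y0: int, x1: int, y1: int, hit: bool = True) -> None:
        """Update the cells along one camera ray from (x0, y0) to (x1, y1).

        Cells before the endpoint were seen through, so they move toward free;
        the endpoint moves toward occupied if the ray hit a surface.
        """
        cells = line_cells(x0, y0, x1, y1)
        for x, y in cells[:-1]:
            self._add(x, y, MISS)
        x, y = cells[-1]
        self._add(x, y, HIT if hit else MISS)

    def mark_occupied(self, x: int, y: int) -> None:
        """Hypothetical helper: pin a cell (e.g. an AprilTag-marked obstacle) to occupied."""
        if self.in_bounds(x, y):
            self.cells[y * self.width + x] = INT8_MAX

    def probability(self, x: int, y: int) -> float:
        """Assumed piecewise-linear map to p(c): -128 -> 0.0, 0 -> 0.5, 127 -> 1.0."""
        v = self.get(x, y)
        return 0.5 + v / 256 if v < 0 else 0.5 + v / 254
```

Saturating rather than wrapping keeps repeated observations from flipping a confidently occupied cell to free through integer overflow.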

Shannon entropy measures the uncertainty of a discrete random variable \( X \):

\[ H(X) = -\sum_{x\in\mathcal{X}} p(x) \log_2 p(x) \]

where \( p(x) \) is the probability of outcome \( x \) in the sample space \( \mathcal{X} \). Each grid cell is a binary variable (occupied or free), so this reduces to:

\[ H(c) = -\left[ p(c)\log_2 p(c) + (1-p(c))\log_2(1-p(c)) \right] \]

where \( p(c) \) is the probability that cell \( c \) is occupied. Entropy is maximized (1 bit) at \( p(c)=0.5 \) and is zero at \( p(c)=0 \) or \( p(c)=1 \) (taking \( 0 \log_2 0 = 0 \)), as desired in our occupancy grid formulation: unknown cells offer the most information to gain, and fully determined cells offer none.
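The per-cell formula translates directly into Python. This is a minimal sketch: it takes the occupancy probability \( p(c) \) as a float, since the write-up does not say how int8 cell values are converted to probabilities, and it applies the convention \( 0 \log_2 0 = 0 \) so that fully known cells return exactly zero.

```python
import math


def cell_entropy(p: float) -> float:
    """Binary Shannon entropy H(c), in bits, of a cell that is occupied with probability p."""
    if p <= 0.0 or p >= 1.0:
        # lim p->0 of p*log2(p) is 0, so a cell known to be free or occupied has no entropy.
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))
```

For example, `cell_entropy(0.9)` is about 0.469 bits, so a cell that is 90% likely to be occupied still carries roughly half the entropy of a completely unknown one.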

For a candidate pose \( \hat q_i \), we cast \( N=200 \) rays from the camera to cover its field of view. Let \( \mathcal{C}_k \) be the set of grid cells intersected by the \( k \)-th ray. The entropy accumulated over all cells the drone would see from \( \hat q_i \) is then:

\[ H(\hat q_i) = \sum_{k=1}^{N} \sum_{c\in \mathcal{C}_k} H(c) \]

We sample \( M=2000 \) candidate views (drone poses) around the current pose \( q_t=(x_t,y_t,\theta_t) \in SE(2) \): each position coordinate is offset by a Gaussian sample with mean 2 m and unit variance, and the heading is drawn uniformly. For \( i = 1, \dots, M \):

\[ \begin{aligned} \hat x_i &\sim x_t + \mathcal{N}(2,1) \\ \hat y_i &\sim y_t + \mathcal{N}(2,1) \\ \hat \theta_i &\sim U(0, 2\pi) \end{aligned} \]

The Next Best View pose \( q_{t+1} \) is the candidate with the highest total entropy, which serves as our measure of expected information gain:

\[ q_{t+1} = \operatorname*{argmax}_{\hat q_i \in \{\hat q_1, \hat q_2, \dots, \hat q_M \}} H(\hat q_i) \]

To navigate from the drone's current pose \( q_t \) to the subgoal \( q_{t+1} \), we run A* search over a thresholded copy of the occupancy grid. If a survivor is detected from the current pose, their location becomes the new subgoal instead and is sent to the PX4 autopilot; once the drone reaches it, it drops a package. Otherwise, the Next Best View algorithm selects the next subgoal.
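The Next Best View selection can be sketched in pure Python as follows. Only \( N=200 \), \( M=2000 \), and the sampling distributions come from the write-up; the field of view `FOV`, ray length `MAX_RANGE`, cell size `RESOLUTION`, Bresenham ray traversal, and the choice to stop a ray at the map boundary (but not at obstacles) are assumptions. For simplicity the grid here holds probabilities \( p(c) \) directly rather than int8 values.

```python
import math
import random
from typing import List, Optional, Tuple

Pose = Tuple[float, float, float]  # (x [m], y [m], theta [rad]) in SE(2)
Grid = List[List[float]]           # occupancy probabilities p(c), indexed grid[y][x]

N_RAYS = 200              # N, from the write-up
N_CANDIDATES = 2000       # M, from the write-up
FOV = math.radians(90.0)  # hypothetical camera field of view
MAX_RANGE = 4.0           # hypothetical ray length (m)
RESOLUTION = 0.1          # hypothetical cell size (m)


def cell_entropy(p: float) -> float:
    """Binary entropy H(c) in bits, with 0 log 0 taken as 0."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def line_cells(x0: int, y0: int, x1: int, y1: int) -> List[Tuple[int, int]]:
    """Bresenham traversal: the cells C_k crossed by a ray, from start to end."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if (x0, y0) == (x1, y1):
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def to_cell(x: float, y: float) -> Tuple[int, int]:
    """Metric position to grid indices (floor handles negative coordinates correctly)."""
    return math.floor(x / RESOLUTION), math.floor(y / RESOLUTION)


def view_entropy(grid: Grid, pose: Pose, n_rays: int = N_RAYS) -> float:
    """H(q_i): sum of H(c) over the cells crossed by each of n_rays rays."""
    x, y, theta = pose
    cx, cy = to_cell(x, y)
    total = 0.0
    for k in range(n_rays):
        # Spread the rays evenly across the field of view, centred on the heading.
        angle = theta - FOV / 2 + FOV * k / max(n_rays - 1, 1)
        ex, ey = to_cell(x + MAX_RANGE * math.cos(angle), y + MAX_RANGE * math.sin(angle))
        for gx, gy in line_cells(cx, cy, ex, ey):
            if not (0 <= gy < len(grid) and 0 <= gx < len(grid[0])):
                break  # the ray has left the map
            total += cell_entropy(grid[gy][gx])
    return total


def sample_candidates(pose: Pose, m: int, rng: random.Random) -> List[Pose]:
    """Draw m candidate poses: position offsets ~ N(2, 1) per axis, heading ~ U(0, 2*pi)."""
    x, y, _ = pose
    return [
        (x + rng.gauss(2.0, 1.0), y + rng.gauss(2.0, 1.0), rng.uniform(0.0, 2 * math.pi))
        for _ in range(m)
    ]


def next_best_view(grid: Grid, pose: Pose, m: int = N_CANDIDATES,
                   n_rays: int = N_RAYS, rng: Optional[random.Random] = None) -> Pose:
    """Return the sampled candidate with the largest accumulated entropy (the argmax)."""
    if rng is None:
        rng = random.Random()
    candidates = sample_candidates(pose, m, rng)
    return max(candidates, key=lambda q: view_entropy(grid, q, n_rays))
```

A practical version would likely also reject candidates that land in occupied or unreachable cells and stop rays at occupied cells; the write-up does not say whether either was done. Evaluating 2000 candidates with 200 rays each is slow in pure Python, so a vectorized or compiled implementation would be advisable onboard.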
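A* over a thresholded grid can be sketched as below. The write-up states only that A* runs on a thresholded copy of the occupancy grid; the threshold value `FREE_BELOW`, the 8-connected neighbourhood with unit and \( \sqrt{2} \) step costs, the octile-distance heuristic, and the rule against cutting obstacle corners are our assumptions. Converting metric poses to cells and sending the resulting setpoints to PX4 are omitted.

```python
import heapq
import math
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (x, y) grid indices

FREE_BELOW = 64  # hypothetical threshold: int8 values below this are traversable
NEIGHBOURS = [(-1, -1), (0, -1), (1, -1), (-1, 0), (1, 0), (-1, 1), (0, 1), (1, 1)]


def threshold(grid: List[List[int]], free_below: int = FREE_BELOW) -> List[List[bool]]:
    """Thresholded copy of an int8 occupancy grid: True where the cell is traversable."""
    return [[v < free_below for v in row] for row in grid]


def octile(a: Cell, b: Cell) -> float:
    """Consistent heuristic for 8-connected moves costing 1 (straight) and sqrt(2) (diagonal)."""
    dx, dy = abs(a[0] - b[0]), abs(a[1] - b[1])
    return max(dx, dy) + (math.sqrt(2) - 1) * min(dx, dy)


def astar(free: List[List[bool]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 8-connected path from start to goal over free cells, or None if unreachable."""
    def passable(c: Cell) -> bool:
        x, y = c
        return 0 <= y < len(free) and 0 <= x < len(free[0]) and free[y][x]

    if not (passable(start) and passable(goal)):
        return None
    g: Dict[Cell, float] = {start: 0.0}
    parent: Dict[Cell, Cell] = {}
    closed = set()
    frontier = [(octile(start, goal), 0.0, start)]  # heap entries are (f = g + h, g, cell)
    while frontier:
        _, g_cur, cur = heapq.heappop(frontier)
        if cur == goal:
            path = [cur]
            while cur in parent:  # walk back to the start, which has no parent
                cur = parent[cur]
                path.append(cur)
            return path[::-1]
        if cur in closed:
            continue  # stale heap entry: this cell was already expanded more cheaply
        closed.add(cur)
        for dx, dy in NEIGHBOURS:
            nxt = (cur[0] + dx, cur[1] + dy)
            if not passable(nxt):
                continue
            # Disallow diagonal moves that would clip the corner of an obstacle.
            if dx and dy and not (passable((cur[0] + dx, cur[1])) and passable((cur[0], cur[1] + dy))):
                continue
            cost = g_cur + (math.sqrt(2) if dx and dy else 1.0)
            if cost < g.get(nxt, math.inf):
                g[nxt] = cost
                parent[nxt] = cur
                heapq.heappush(frontier, (cost + octile(nxt, goal), cost, nxt))
    return None
```

With `FREE_BELOW = 64`, unknown cells (value 0) count as traversable, which lets the planner reach subgoals chosen in unexplored space; a more conservative threshold would trade reachability for safety.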

2. delivery mechanism

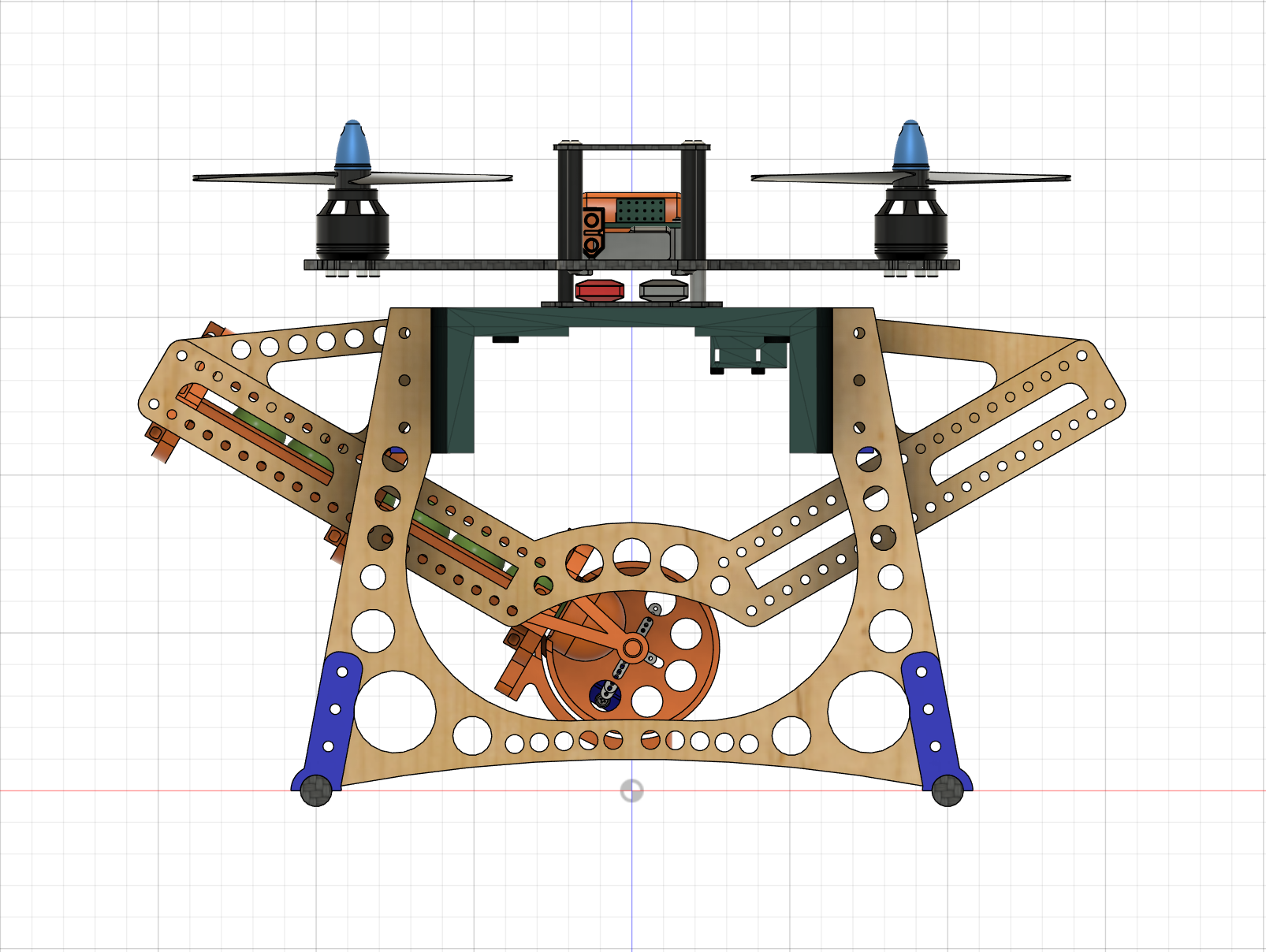

A 3D-printed drop mechanism driven by a servo motor, with a laser-cut wooden frame.

3. visualization

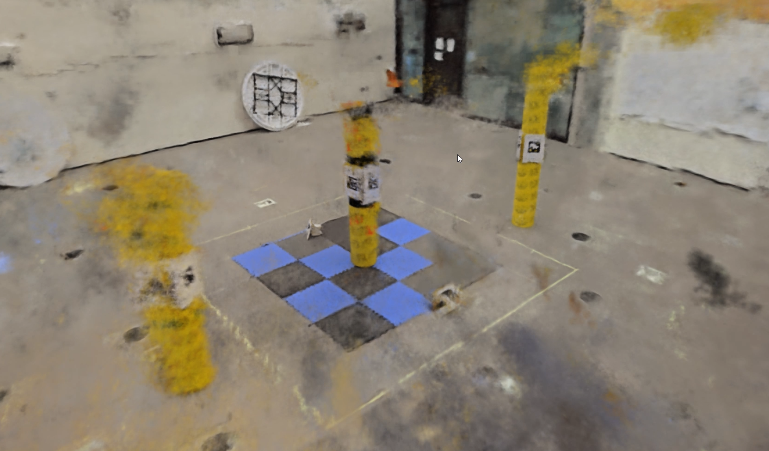

After completing the exploration and locating survivors, the drone uploads a video log recorded along its trajectory to an offboard server once a connection is available. To improve situational awareness and produce an accurate map of the rescue environment, we reconstruct the traversed environment with COLMAP, a structure-from-motion tool that recovers camera poses from images, and Instant-NGP, which trains a neural radiance field (NeRF) from posed images.

results

Code: https://github.com/AndrewZL/rho

Video: